Most people know that when you sell or give away a computer, you should format its hard drive to make sure your sensitive information doesn’t fall into the wrong hands. And most of those people know that formatting a drive doesn’t actually erase all the data. Instead, you should use a special utility that overwrites every block of data on the drive. And a smaller portion of those people know that overwriting a block just once isn’t enough. If you really want to be safe, you should apply the Gutmann method, which overwrites every data block 35 times. And an even smaller portion of those people know that the Gutmann method is a myth.

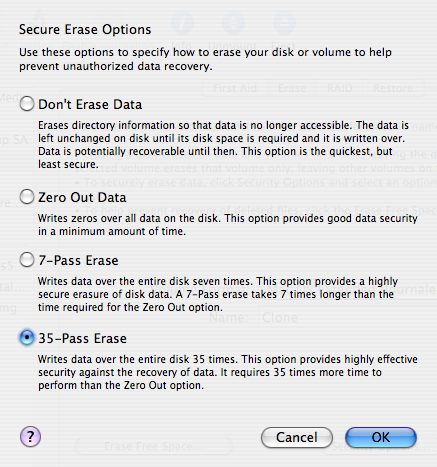

I had always thought that the idea of overwriting the same data block 35 times was a bit dubious. (Why would 35 times be secure but 34 times not be?) And yet, most disk utilities provide an option to erase a hard drive 35 times over.

In a recent Slashdot article, I discovered a comment that shed some light on this issue. User Psionicist wrote (with typos faithfully reproduced):

I would like to take the oppertunity here to debunk a very common myth regarding hard drive erasure.

You DO NOT have to overwrite a file 35 times to be “safeâ€. This number originates from a misunderstanding of a paper about secure file erasure, written by Gutmann.

The 35 patterns/passes in the table in the paper are for all different hard disk encodings used in the 90:s. A single drive only use one type of encoding, so the extra passes for another encoding has no effect at all. The 35 passes are maybe useful for drives where the encoding is unknown though.

For new 2000-era drives, simply overwriting with random bytes is sufficient.

Here’s an epilogue by Gutmann for the original paper:

Epilogue In the time since this paper was published, some people have treated the 35-pass overwrite technique described in it more as a kind of voodoo incantation to banish evil spirits than the result of a technical analysis of drive encoding techniques. As a result, they advocate applying the voodoo to PRML and EPRML drives even though it will have no more effect than a simple scrubbing with random data. In fact performing the full 35-pass overwrite is pointless for any drive since it targets a blend of scenarios involving all types of (normally-used) encoding technology, which covers everything back to 30+-year-old MFM methods (if you don’t understand that statement, re-read the paper). If you’re using a drive which uses encoding technology X, you only need to perform the passes specific to X, and you never need to perform all 35 passes. For any modern PRML/EPRML drive, a few passes of random scrubbing is the best you can do. As the paper says, “A good scrubbing with random data will do about as well as can be expectedâ€. This was true in 1996, and is still true now.

Looking at this from the other point of view, with the ever-increasing data density on disk platters and a corresponding reduction in feature size and use of exotic techniques to record data on the medium, it’s unlikely that anything can be recovered from any recent drive except perhaps one or two levels via basic error-cancelling techniques. In particular the the drives in use at the time that this paper was originally written have mostly fallen out of use, so the methods that applied specifically to the older, lower-density technology don’t apply any more. Conversely, with modern high-density drives, even if you’ve got 10KB of sensitive data on a drive and can’t erase it with 100% certainty, the chances of an adversary being able to find the erased traces of that 10KB in 80GB of other erased traces are close to zero.

So it seems my suspicions have been confirmed: You do not need to erase a hard drive 35 times before selling it on eBay. A quick zeroing out of the data is sufficient.