August 23rd, 2006 — Games, Personal, Software

This summer I’ve gotten into the habit of playing Warcraft III online. Unlike certain other online games that are all the rage these days, WC3 isn’t a timesink. Games only last about twenty minutes on average, and they’re self-contained, so you don’t feel like you have to keep playing to build up your character’s experience points or inventory or whatever.

My favorite format is the three-on-three pick-up game, in which the server selects six random people and forms them into two ad hoc teams. One such game was a bit more interesting than most, so I saved it as a replay. (You can play it back if you happen to own a copy of Warcraft III.) Here’s a play-by-play:

11:30 The opposing team masses an army of Level 2 units and rushes Orange.

14:15 Orange’s base is toast, and Teal declares his team the winner. Orange gives up and leaves the game. It’s over! …or is it?

Orange faces destruction.

17:00 The opposing team now sets their sights on me. Purple advises that I retreat to his base, so I teleport there.

18:00 As my base is being destroyed, Purple and I counter-attack Red.

20:00 Caught off-guard, the opposing team tries to defend Red, but it’s too late. Red is annihilated, leaving Green and Teal. With only Purple’s base and my five Chimeras left, is victory possible?

20:30 I send my Chimeras for an end run around the back of Green’s base. He has no AA, so the Chims destroy his base easily. Only Teal remains!

22:30 My Chimeras make another end run, this time to the back of Teal’s base, destroying his gold mine.

27:00 Teal and Green attack us in a last-ditch effort, sending everything they’ve got. It’s a battle for the center!


27:30 The opposing team’s Level 2 units are no match for our Level 3 Chimeras and Tauren! Green and Red give up.

28:00 Teal, deprived of gold by my Chimeras, has no units and admits defeat.

28:18 Victory! What a comeback!

August 17th, 2006 — Mac, Rants, Research, Software

My colleagues and I were finally able to publish our Autonet research at a conference in Chicago. Being the lead author, I hopped on a flight to the Windy City last Tuesday to present the results of our work. When I arrived at the hotel, I met the other two speakers scheduled for the afternoon session. The first one was busy setting up his Dell at the podium while the audience slowly began to fill the room. The second speaker had no laptop, only a USB drive with his slides; he was hoping to borrow the first speaker’s Dell.

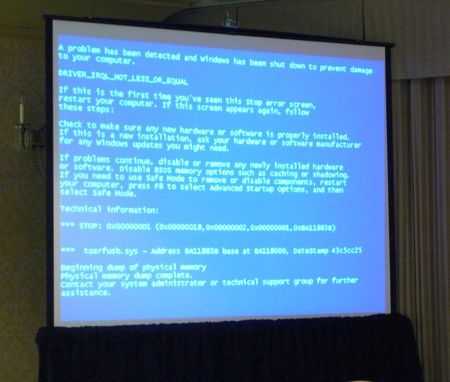

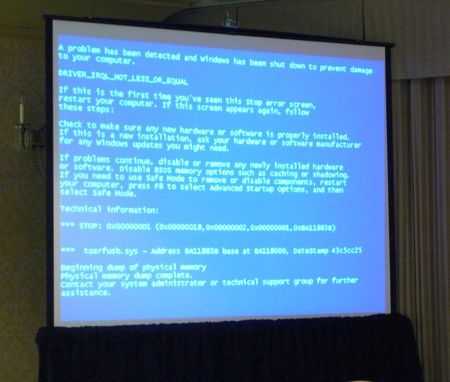

We were about two minutes away from showtime when the projection screen suddenly froze. Here’s what everyone in the audience saw:

It turned out that the guy’s Dell was crashing whenever he tried to start his PowerPoint presentation. Rebooting didn’t help. Windows insisted on giving him the Blue Screen of Death.

By this time, we were running late. Frustrated, the two speakers saw me typing on my Mac and asked if I could help. I walked up to the podium, plugged my Mac into the projector, loaded both of their presentations into Keynote, and returned to my seat. The first speaker then began his presentation using my PowerBook. Then the second speaker. Then me.

No BSoD. No crashing. It just worked. My Mac saved the day!

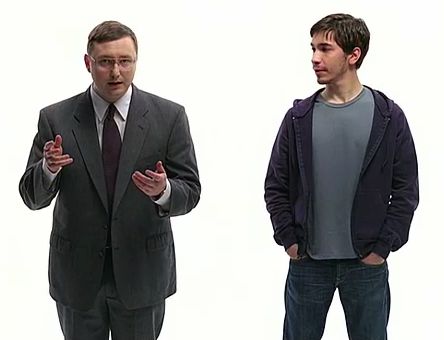

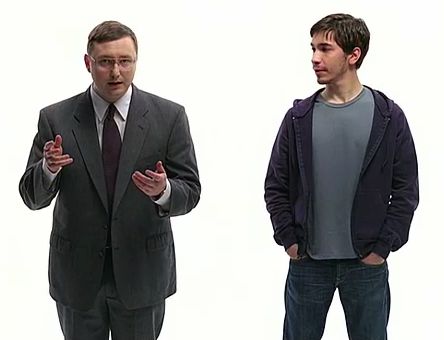

Hmm, that reminds me of an ad…

August 12th, 2006 — Personal, Software

I’m a regular reader of Dr. Dobb’s Journal, a monthly magazine covering the world of software development. One of my favorite columns in Dr. Dobb’s is “Nisley’s Notebook” because it mainly deals with my favorite topic: embedded systems. You can imagine my surprise, then, when I reached the end of the July 2006 column and saw my name:

Speaking of Windows ME, alert reader Trevor Harmon reminds me that it still allowed direct, user-mode I/O port control. Read more on FAT and the Microsoft patents at en.wikipedia.org/wiki/File_Allocation_Table.

I read that paragraph several times, thinking there must be some other Trevor Harmon who reads Dr. Dobb’s and had written to Ed Nisley. Then I suddenly remembered: I had, in fact, emailed Ed some time ago about his April 2006 column. Here’s how it went down:

In your April article in Dr. Dobb’s Journal called “Tight Code,” you state:

“The notion of a user program directly controlling an I/O port pretty much died with Windows 98.”

Why Windows 98? I thought it was architecturally almost identical to Windows 95. Was there some significant difference in the Windows API that I’m not aware of? In any case, I thought direct control of an I/O port died much earlier, way back in the 1970s with the advent of UNIX and its abstraction of I/O hardware as a /dev file.

Thank you,

Trevor

To which Ed replied:

Direct I/O control died -with- Win98: that was the last Windows flavor allowing that sort of thing. WinME sorta-kinda did, too, but MS was revamping the driver model and (IIRC) discouraged using the Olde Versions.

Or maybe I just have a picket-fence error…

Indeed, the various Unix-oid systems did bring protected hardware to the university level, but the vast bulk of Windows boxes pretty much overshadowed all that. As far as the mass market goes, programmers were doing direct I/O from DOS right up through Win98 (or ME?), at which point everything they thought they knew went wrong.

Ed

I responded:

Ah, I read that as “was killed by Win98”, as if Microsoft had developed some revolutionary product that nullified anyone’s desire for direct hardware access.

Yes, I believe ME, not 98, was actually the last Windows version to support direct hardware access. That’s one of the main reasons I was confused: It was the NT kernel, not Win98, that changed things in the Microsoft world.

Also, you used the word “notion,” and I would say that the notion died long before Windows ME even existed. Nobody (except maybe for game developers) supported the notion of direct hardware access, even though it continued to exist in legacy operating systems like Windows 9x. So I was doubly confused.

Trevor

And Ed concluded with:

Part of the job here is to write so that sort of thing doesn’t happen… I’ll drop a correction in the bitstream for the next column.

Thanks for keeping me straight!

Ed

So, this other “Trevor Harmon†mentioned in the column was me after all! I had completely forgotten about my conversation with Ed. Although I’ve published in Dr. Dobb’s before, it was still fun to see my name in print, especially in such an unexpected way.

August 9th, 2006 — Research

San Diego is home to an elaborate research facility run by the U.S. Navy. Known as the Space and Naval Warfare Systems Center, it sponsors a yearly contest for university students. The challenge? Build a robot that can travel underwater and, without any human control, perform a series of tasks (a pipeline inspection, for example) within a given time limit.

Each year, teams of undergraduates come from around the world to showcase their designs for an autonomous underwater vehicle, or AUV, as they are called. The idea is to get students interested in the field of unmanned robotics and teach them what it’s like to be involved in a start-up venture. (Each team must provide its own funding for the contest.)

I only recently learned about this AUV contest, even though I had visited the center about two years ago. (My research group had been holding talks with the scientists there to discuss the possibility of collaborating on a project.) Because robotics is a field I’m increasingly interested in, I decided to visit the center on the last day of the contest, when the finals were being held, to see what it was all about and maybe catch a glimpse of some cool bots.

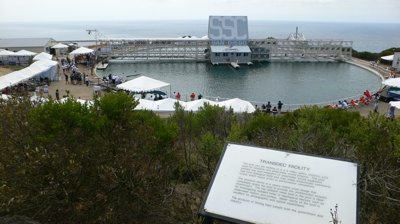

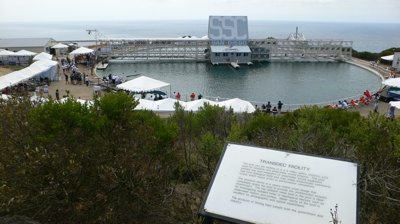

Unfortunately, there wasn’t much to see. Most of the action was hidden in the depths of this large murky pool.

Here’s a wide shot so you can get a feel for the size of the pool. On the left, you can see the large orange crane that was used for lowering the AUVs into the water.

As you can probably tell, there wasn’t much to look at. Even when an AUV was making a run, you couldn’t see what was going on, so it was kind of boring for spectators. Of course, the participants not lucky enough to make it to the finals didn’t have much to do, either. (I saw one team in a tent in the back playing cards with each other.)

Perhaps the most interesting moments were when a robot failed and had to be returned manually by the two divers shown here. A common occurrence, strangely enough, was that a robot would be released and then suddenly sink right to the bottom! I wasn’t sure if this was due to buoyancy problems or software problems.

All in all, I was glad I was able to witness the event, even though I didn’t stay long. I spent the rest of the day with my wife at San Diego’s other watery attraction.

Later, I found out from Slashdot that the University of Florida came away with the win.

August 3rd, 2006 — Ghana

As a Returned Peace Corps Volunteer (RPCV), I like to keep up with the Peace Corps Ghana community. One of the best ways of doing that, I’ve found, is through a Peace Corps Ghana mailing list, which currently has around 250 members. Sometimes RPCVs will use the list to exchange anecdotes or try to find old friends they’ve lost touch with. Mostly, though, the traffic comes from people who’ve just received their service invitation and have questions about what to pack, what to wear, and so on.

Some time ago, I realized that the same questions were being asked on the list over and over again. (“What should I pack?” is the perennial favorite.) The RPCVs on the list want to help, but it’s not fun going through the same Q and A routine for each new batch of volunteers. And it’s not so great for those who post the questions, either: Helpful advice might not come up in every new discussion, either because old knowledge is forgotten or because people simply don’t have time to mention all of their tips. Of course, there’s an archive of old messages, but searching through them to find answers is tedious and time-consuming.

Seems to me that new Peace Corps volunteers would love to have a single source for this collective wisdom, and RPCVs would love to stop repeating themselves, so I decided to kill two birds with one stone by creating a FAQ. The idea was to capture the most up-to-date information about Peace Corps Ghana. It would be a repository of helpful tips that any new volunteer should know but won’t find on the official Peace Corps website. (Examples: Is it wise to bring along my iPod? How do I mail myself something? Is it okay for men to have long hair?)

To make sure this FAQ would represent the combined knowledge of RPCVs, and not one man’s opinion, I set it up as a wiki that could be edited or expanded by anyone. And as a wiki, the FAQ would become a “living” document, never going out of date. (Theoretically, at least.)

In addition to the FAQ, I also added some poignant anecdotes written by Ghana RPCVs, as well as links to other websites related to Peace Corps Ghana. Having gone to all that trouble already, I thought, “Why limit this wiki to the Peace Corps? Why not make it for everything Ghana?”

And that’s exactly what I did. I even registered its own domain name: ghanawiki.info. I started it off with a few brief articles on cheap flights to Ghana and how to get Ghanaian radio and TV over the Internet.

Hopefully, other Ghanaphiles will soon discover the wiki and help me create new articles and add more content to the existing ones. With luck, it may one day reach “critical mass” and become a key source of information for Peace Corps Ghana volunteers or anyone else wanting to learn more about the Ghana experience.

July 29th, 2006 — Mac, Software, Tips

Out of the blue, iMovie decided to stop working for me. I’d launch it, and it would immediately quit. Not crashing, just quitting. (No crash log was generated.) The dock icon wouldn’t even appear.

I knew it wasn’t a problem with the iMovie application itself because when I switched to another user account on the same machine, iMovie ran fine. It had to be something in my home directory that was causing the problem.

Normally, simply getting rid of any preferences or caches fixes this sort of problem. I checked my account and found these:

- ~/Library/iMovie/

- ~/Library/Caches/iMovie HD/

- ~/Library/Preferences/com.apple.iMovie.plist

- ~/Library/Preferences/com.apple.iMovie3.plist
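Moving those four items aside by hand is easy enough, but if you end up doing it repeatedly while troubleshooting, a small script can do it in one go. Below is a minimal Python sketch, assuming the four paths listed above; the function name `set_aside_imovie_prefs` and the backup location are my own hypothetical choices. It moves rather than deletes, and it keeps the `Library/...` folder layout inside the backup folder, so everything can be put back if it turns out not to matter.

```python
import shutil
from pathlib import Path
from typing import List

# The four iMovie-related items listed above, relative to the home directory.
IMOVIE_ITEMS = [
    "Library/iMovie",
    "Library/Caches/iMovie HD",
    "Library/Preferences/com.apple.iMovie.plist",
    "Library/Preferences/com.apple.iMovie3.plist",
]


def set_aside_imovie_prefs(home: Path, backup_dir: Path) -> List[Path]:
    """Move any existing iMovie prefs/caches from `home` into `backup_dir`.

    The relative layout (e.g. Library/Preferences/...) is recreated inside
    the backup folder so each item can be moved back later. Items that do
    not exist are skipped. Returns the new locations of the moved items.
    """
    moved = []
    for rel in IMOVIE_ITEMS:
        src = home / rel
        if not src.exists():
            continue
        dest = backup_dir / rel
        dest.parent.mkdir(parents=True, exist_ok=True)
        shutil.move(str(src), str(dest))
        moved.append(dest)
    return moved
```

With iMovie quit, you would call something like `set_aside_imovie_prefs(Path.home(), Path.home() / "Desktop" / "iMovie-prefs-backup")`. As the rest of this post shows, though, clearing preferences was not what fixed my particular problem.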

Unfortunately, moving them out of my Library directory didn’t change a thing. iMovie was still quitting on launch. Frustrated, I took my PowerBook to the local Apple Store for some advice, but even the “genius†at the bar wasn’t able to help. I returned home thinking that I’d have to reinstall Mac OS X before iMovie would ever work again.

I decided to give iMovie one last try. On a whim, I dragged its icon out of the Applications folder and into my Desktop, then launched it from there. Imagine my shock when iMovie started up! I quit, moved the icon back to Applications, and launched iMovie again. It still worked! Everything was back to normal.

I have no idea why dragging iMovie out of the Applications folder solved the problem. In fact, it shouldn’t have worked. But it did. Assuming this isn’t some obscure, one-in-a-zillion issue, it may affect other Mac apps, not just iMovie, so I’m posting this tip in the hope that it helps others who run into the same problem.

July 19th, 2006 — Gadgets, Software, Tips

TomTom Navigator 5 is a marvelous piece of software that can turn a PDA into a GPS navigation system. At $180, it’s somewhat expensive, but if you already have a PDA and get a cheap GPS receiver, you can end up saving hundreds of dollars over traditional stand-alone navigators.

For a few months now, I’ve been using it with my Treo 650, which means in addition to making calls, tracking finances, playing games, and surfing the web, my little smartphone keeps me from getting lost, too! I no longer have to ask for directions or look them up on the Internet; I just hop in the car, give Navigator an address, and it tells me exactly where to turn.

One of the more useful features in Navigator is that when you arrive at an interesting location, you can save your current GPS coordinates as a “Favorite.” You can then return to your Favorite at a later date without having to give Navigator an address. This feature is especially useful for remote locations that might not have an actual street address to begin with.

For instance, on a trip to South Dakota last week, my wife and I stayed at my parents’ cabin, which happens to be pretty deep in the woods, so I marked its location in Navigator as a Favorite. It wasn’t that I was worried about finding my way back; instead, I wanted to remember the coordinates of the cabin so that I could easily look them up in Google Earth when I returned home.

When I got back, I discovered that extracting those GPS coordinates was anything but easy. The Navigator 5 software won’t display the coordinates of a Favorite, and it doesn’t offer any documented way of uploading a Favorite to a computer. That meant I had to find an undocumented way.

After scouring numerous discussion boards and various websites, I finally cobbled together enough information on how to extract the GPS coordinates of a Favorite from TomTom Navigator 5. To save others from the trouble I went through, I’m posting a step-by-step guide below.

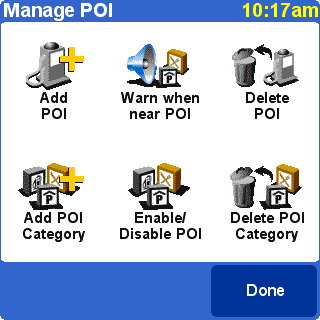

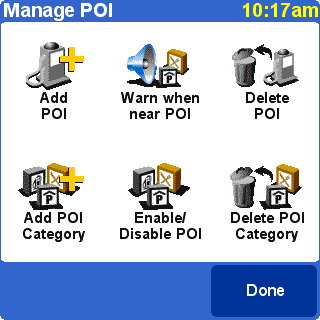

- Convert the Favorite into a Point of Interest (POI).

    - Go to Change Preferences, then Manage POI, and choose Add POI Category.

    - Type a name for the new Favorites category. (“Favorites” sounds like a good choice.)

    - Choose a marker.

    - Choose Add POI, then select the Favorites category.

    - Choose Favorite and select the Favorite you want to convert to a POI.

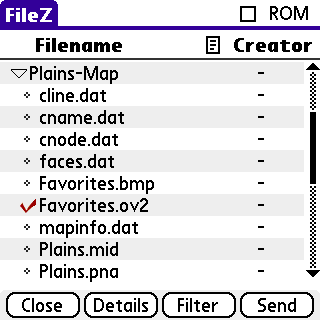

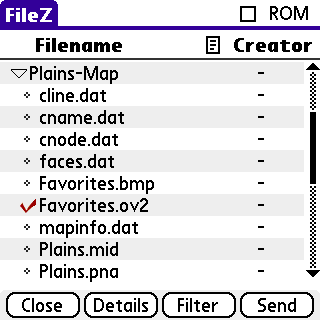

- Transfer the POI file to your computer. The easiest way to do this is with the FileZ utility.

    - Launch FileZ, then view the contents of the external card.

    - There should be a directory there called region-Map, where region is the map you are currently using (e.g., “Plains”).

    - Open that directory by tapping on the little triangle.

    - Look for a file ending with “OV2” and having the same name as the POI category you created in the previous step (e.g., “Favorites.ov2”).

    - Transfer this file to your computer. The easiest way to do this is to tap the file to select it, then tap the Send button and choose the Bluetooth option. This will shoot the file through the air onto your computer (assuming, of course, that your computer supports Bluetooth, as all good computers do). If not, you can choose the VersaMail option to email yourself the file, or use any other means at your disposal.

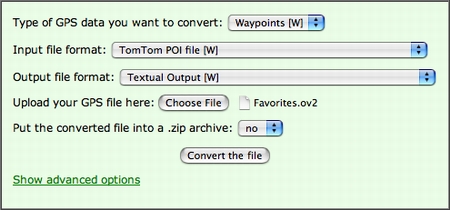

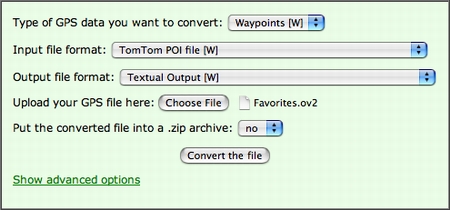

- Extract the contents of the POI file. The next step is to convert the OV2 file from its original binary form into something that mere mortals can read. The easiest way to do this is at the GPS Visualizer website, which provides a convenient front-end for the GPSBabel software.

    - Go to the GPSBabel section of the GPS Visualizer website.

    - For the input file format, choose “TomTom POI file”.

    - For the output file format, choose “Textual Output”.

    - Select the file (e.g., Favorites.ov2) that you transferred to your computer in the previous step.

    - Click the “Convert the file” button.

That’s it! You should now see a list of your Favorites. The first column is the name of the Favorite; the second and third columns are its latitude and longitude. (The fourth column in parentheses is the location in UTM coordinates.) For example:

Cabin  N44 58.663  W103 35.017  (12T 617600 4890373)
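GPS Visualizer does the decoding for you, but the OV2 format is simple enough to read locally. The Python sketch below assumes the record layout commonly described in third-party documentation, not anything official from TomTom: each record starts with a one-byte type; types 0 (deleted), 2 (POI), and 3 (extended POI) are followed by a four-byte little-endian length covering the whole record; a POI then stores longitude and latitude, in that order, as signed little-endian integers in units of 1/100,000 of a degree, followed by a zero-terminated name; and type 1 “skipper” records are a fixed 21 bytes. The function name `read_ov2` and the Latin-1 decoding of names are my own assumptions.

```python
import struct
from typing import List, Tuple


def read_ov2(data: bytes) -> List[Tuple[str, float, float]]:
    """Return (name, latitude, longitude) for each POI in an OV2 file's bytes.

    Assumes the third-party-documented layout described above; record
    types other than 1, 2 and 3 are skipped using their length field.
    """
    pois = []
    pos = 0
    while pos < len(data):
        rtype = data[pos]
        if rtype == 1:
            # Skipper record: type byte + 4-byte offset + 16-byte bounding box.
            pos += 21
            continue
        (length,) = struct.unpack_from("<I", data, pos + 1)
        if length < 5:
            raise ValueError(f"corrupt record at offset {pos}")
        if rtype in (2, 3):
            # Longitude comes first, then latitude, both in 1/100000 degree.
            lon_raw, lat_raw = struct.unpack_from("<ii", data, pos + 5)
            raw_name = data[pos + 13 : pos + length]
            name = raw_name.split(b"\x00", 1)[0].decode("latin-1")
            pois.append((name, lat_raw / 100000, lon_raw / 100000))
        pos += length
    return pois
```

Reading the file transferred from the Treo would then look like `read_ov2(Path("Favorites.ov2").read_bytes())`, with `Path` imported from `pathlib`.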

Now that you know the coordinates of your Favorite, you can use them any way you wish. For instance, you can copy and paste them directly into Google Earth’s search box, and it will immediately fly you there.
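Some tools want plain decimal degrees instead of the hemisphere, degrees, and minutes shown above. The conversion is just degrees + minutes / 60, made negative for south latitudes and west longitudes: for the example line, 44 + 58.663/60 ≈ 44.97772 and −(103 + 35.017/60) ≈ −103.58362. Here’s a small Python sketch that pulls the name and both coordinates out of one line of the textual output, assuming the column layout of the example above (the function names are hypothetical).

```python
import re
from typing import Tuple

# One coordinate in hemisphere-degrees-minutes form, e.g. "N44 58.663".
_LAT = r"[NS]\d{1,2}\s+\d{1,2}(?:\.\d+)?"
_LON = r"[EW]\d{1,3}\s+\d{1,2}(?:\.\d+)?"
# Name, then latitude, then longitude; anything after (e.g. UTM) is ignored.
_LINE = re.compile(rf"^(.*?)\s+({_LAT})\s+({_LON})")


def ddm_to_decimal(coord: str) -> float:
    """Convert "N44 58.663" or "W103 35.017" to signed decimal degrees."""
    hemisphere = coord[0]
    degrees, minutes = coord[1:].split()
    value = int(degrees) + float(minutes) / 60
    return -value if hemisphere in "SW" else value


def parse_line(line: str) -> Tuple[str, float, float]:
    """Return (name, latitude, longitude) from one line of textual output."""
    match = _LINE.match(line.strip())
    if match is None:
        raise ValueError(f"unrecognized line: {line!r}")
    return match.group(1), ddm_to_decimal(match.group(2)), ddm_to_decimal(match.group(3))
```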

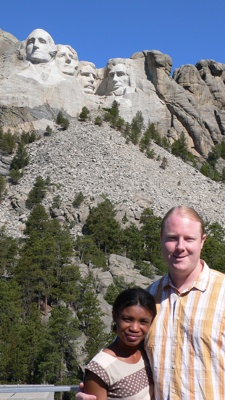

July 17th, 2006 — Personal

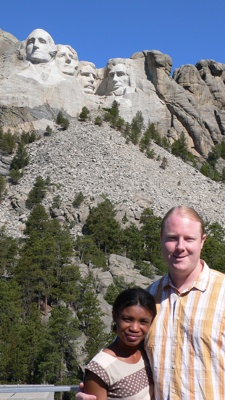

For our summer vacation, Yaa and I spent five days in the Black Hills for a family reunion. This was Yaa’s first time ever in that part of the country, and my first time in the last fifteen years, so it was quite an experience for both of us. Most of our time was spent in a fantastic two-story cabin in the middle of the woods that my parents had recently purchased.

From this “base camp,” we journeyed to Mount Rushmore, Crazy Horse, and a number of tourist traps in Hill City.

We spent one of our days at Sylvan Lake, where we hiked and rented a couple of paddleboats.

Later, I climbed Harney Peak, where I saw a mountain goat, a rainbow, and the old lookout tower that was used decades ago to watch for forest fires.

And before the trip was over, Yaa had made a new friend.

July 7th, 2006 — Software

Job hunting isn’t easy. Even in this technological age, the simple task of applying for a job online can be a time-killing chore. Companies often require applicants to apply via their website, and the process usually goes something like this:

- Type your first name

- Go to the next field

- Type your last name

- Go to the next field

- Type your street address

- Go to the next field

And so on and so on until you’ve painstakingly entered all the little bits of data from your résumé: employment history, references, skill set, and more. And that’s only for one company. If you want to apply somewhere else, you have to go through the process all over again!

It shouldn’t be this difficult. Just about everyone stores their résumé in an electronic format these days, so why can’t we simply upload the file to our potential employer? That would certainly make life easier for applicants, but employers would rather not have to support a dozen different file formats. Microsoft Word, for instance, comes in several different flavors, and when Word 2007 comes out next year, yet another file format will have to be dealt with.

One way to handle this issue is to require applicants to use a standard, open file format such as HTML or PDF. But that still doesn’t solve everything. Even with a standard file format, applicants make hundreds of different cosmetic choices for their résumés: Some put their education history near the beginning; others put it at the very end. Some might say they have a “Master of Science” degree while others just say they have an “MS”.

Human beings can handle these variations, but computers have a tough time processing them. And companies love to use computers to find applicants. They want to make queries like, “Show me all applicants with a master’s degree” or “How many applicants can write Java software?” But with all those variations, computers can easily get confused. They might think “MS” means “Mississippi” or that a person who was born on an island in Indonesia is actually skilled in a certain programming language.

What we really need, then, is some kind of special file format. Something that strips away all the layout and formatting of a résumé, leaving only the raw content. Each piece of data in the résumé could then be tagged with its meaning. A computer examining the data would be able to say, “Ah-ha, this is a graduation date,†or “I see, this is the name of a reference,†without even breaking a sweat. (Yes, I know computers don’t sweat. I like to anthropomorphize, okay?)

Luckily, this special file format already exists. It’s called HR-XML. Based on the popular XML format, it’s designed so that human resources departments have a common standard for storing and exchanging information about applicants.
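To make the tagging idea concrete, here’s a minimal Python sketch using the standard library’s ElementTree. The markup is deliberately simplified and hypothetical: the element and attribute names (`Applicant`, `Degree level="master"`, `Skill`, `Birthplace`) are made up for illustration and are not the actual HR-XML schema. Because each value carries its meaning, the query for Java programmers looks only at `Skill` elements and ignores an applicant who was merely born on Java.

```python
import xml.etree.ElementTree as ET
from typing import List, Optional

# Simplified, made-up markup for illustration only; NOT the real HR-XML schema.
SAMPLE = """
<Applicants>
  <Applicant name="Alice">
    <Degree level="master">MS, Computer Science</Degree>
    <Skill>Java</Skill>
  </Applicant>
  <Applicant name="Bob">
    <Degree level="bachelor">BA, History</Degree>
    <Birthplace>Java</Birthplace>
  </Applicant>
</Applicants>
"""


def names_where(root: ET.Element, path: str, text: Optional[str] = None) -> List[str]:
    """Names of applicants having an element at `path` (optionally with this exact text)."""
    names = []
    for applicant in root.findall("Applicant"):
        for element in applicant.findall(path):
            if text is None or (element.text or "").strip() == text:
                names.append(applicant.get("name", ""))
                break
    return names


root = ET.fromstring(SAMPLE.strip())
# "Show me all applicants with a master's degree"
masters = names_where(root, "Degree[@level='master']")
# "How many applicants can write Java software?"
java_devs = names_where(root, "Skill", "Java")
```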

But it’s not just for HR departments. Applicants can benefit, too. Instead of keeping track of different versions of the same résumé (say, an HTML version for posting to the web, a plain text one for sending via email, and perhaps a Word version for printing), you can store everything in a single “master” copy in HR-XML format. You can then let the computer automatically generate the version you want. And although HR-XML loses all of the beautiful fonts and formatting you may have created for your résumé, you can tell the computer how to add the cosmetic stuff when it generates an HTML or PDF version for you.
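As an illustration of the single-master-copy idea, the Python sketch below renders one structured résumé into both plain text and HTML. It’s a stand-in, not HR-XSL’s code: the element names and the “Jane Doe” data are hypothetical and simplified rather than real HR-XML. All of the cosmetic decisions live in the two render functions, while the data itself stays free of formatting.

```python
import html
import xml.etree.ElementTree as ET

# A tiny "master" résumé; element names and data are hypothetical, not real HR-XML.
MASTER = """<Resume>
  <Name>Jane Doe</Name>
  <Email>jane@example.com</Email>
  <Skill>Java</Skill>
  <Skill>XSLT</Skill>
</Resume>"""


def to_text(root: ET.Element) -> str:
    """Plain-text version, e.g. for pasting into an email."""
    lines = [root.findtext("Name", ""), root.findtext("Email", ""), "", "Skills:"]
    lines += ["  * " + (skill.text or "") for skill in root.findall("Skill")]
    return "\n".join(lines)


def to_html(root: ET.Element) -> str:
    """HTML version; the markup and layout choices are made here, not in the data."""
    items = "".join(f"<li>{html.escape(skill.text or '')}</li>" for skill in root.findall("Skill"))
    return (
        f"<h1>{html.escape(root.findtext('Name', ''))}</h1>\n"
        f"<p>{html.escape(root.findtext('Email', ''))}</p>\n"
        f"<ul>{items}</ul>"
    )


resume = ET.fromstring(MASTER)
```

Adding a third output format would mean writing a third render function; the master copy itself never changes.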

So…how does one accomplish all these tricks? One way is with the HR-XSL project, a collection of open-source software that helps job applicants take advantage of the HR-XML format. It was started on SourceForge back in 2002, but the original developer never quite got the project off the ground and abandoned it in 2003. I took over as administrator of HR-XSL a couple of months ago and did almost a complete rewrite of the code, releasing a new version yesterday with tons of new features and improvements on old ones. Check it out and let me know what you think.

Today, HR-XML is popular with human resources departments, but so far it hasn’t really caught on anywhere else. There are no major companies or job sites that accept résumés in the HR-XML format. Hopefully, with help from projects like HR-XSL, this will soon change.

June 19th, 2006 — Mac, Software, Tips

Disc images are a fairly common packaging standard for large software programs. If you want to try out a new Linux distribution, for example, chances are you’ll need to download a disc image in ISO format and burn it to a blank CD-ROM or DVD.

But ever since I began using Mac OS X, I’ve been perpetually confused about how to burn ISO images. I’m used to disc burning utilities that have an obvious, explicit command like “Burn ISO Image to CD”. To make life even more confusing, OS X’s Disk Utility does have a Burn command, but it becomes disabled when you click on a disc you want to burn to.

The problem here is that most Mac disc utilities, including the built-in Disk Utility, take a different approach when it comes to image burning. Instead of telling the program you want to burn an image, then choosing the file, you’re supposed to do the reverse: You choose the file, then tell the program you want to burn it.

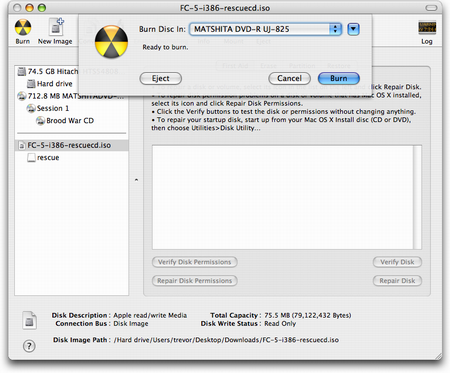

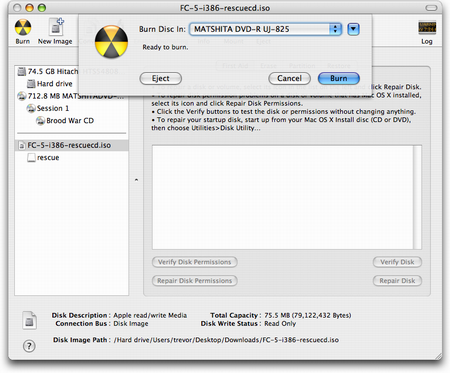

So, to burn an ISO image to disc, here’s what to do:

- Insert a blank disc.

- Start Disk Utility.

- From the File menu, choose Open Disk Image and select the ISO to be burned.

- In the list of volumes, you will now see an item representing the ISO file. Select it.

- Click the Burn button and follow the instructions.

That’s it! Sure, it may seem simple enough, but when you’ve been using Linux and Windows utilities for years, these steps can be a little perplexing and hard to remember.
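If you’d rather skip the GUI, Mac OS X also ships a command-line tool, `hdiutil`, whose `burn` verb writes an image to a blank disc. The Python wrapper below is only a sketch: it assumes you’re on a Mac (so `hdiutil` is on the PATH) and that a blank disc is already inserted, and the function names are my own. Check `man hdiutil` on your system for burn options before relying on it.

```python
import subprocess
import sys
from pathlib import Path
from typing import List


def burn_command(image: Path) -> List[str]:
    """Build the hdiutil invocation that burns `image` to the inserted blank disc."""
    return ["hdiutil", "burn", str(image)]


def burn(image: Path) -> None:
    """Run the burn; raises CalledProcessError if hdiutil reports a failure."""
    if sys.platform != "darwin":
        raise RuntimeError("hdiutil is only available on Mac OS X / macOS")
    if not image.is_file():
        raise FileNotFoundError(image)
    subprocess.run(burn_command(image), check=True)
```

For a hypothetical download named `distro.iso`, you would call `burn(Path("distro.iso"))`.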

UPDATE (6/22): This tip has been published on Mac OS X Hints.

Another slightly mystifying scenario is when you want to make a backup of a disc. Games, for instance, often require you to keep the original disc in your computer as a form of copy protection. Unfortunately, getting the disc out of its case every time you want to play can scratch it up. And of course, it’s simply inconvenient.

Wouldn’t it be great if you could copy an image of the disc to your hard drive, then somehow trick the game into thinking that the disc is inserted when it isn’t? Well, OS X can do that for you, but the steps aren’t obvious. Disk Utility requires you to make a number of choices: Do you copy from the CD volume, its session, or the drive itself? Do you create a “CD/DVD master” or a “read/write” image?

To clear things up, here are the exact steps to create a perfect image of a disc:

- Insert the disc.

- Start Disk Utility.

- You will see that three items have appeared in the list of volumes: The drive itself, one or more sessions, and the contents of the CD. Select one of the sessions.

- Click the New Image button.

- For Image Format, make sure “Compressed” is selected. Leave Encryption as “none”. Click Save.

Disk Utility will then create a Disk Image (DMG) file for you. When the process is finished, you can eject the disc, then mount the image by double-clicking it. Ta-da! All programs will now think the image is the real McCoy, and you can put the true disc into storage for safekeeping.
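A similar image can also be made from Terminal with `hdiutil create -srcdevice`, using `-format UDZO` for a compressed image, and mounted later with `hdiutil attach`. The sketch below just builds those commands in Python. Treat it as an assumption-laden starting point: the device path (`/dev/disk2` here) is hypothetical and has to be looked up with `diskutil list`; the disc may need to be unmounted (not ejected) before hdiutil can read the raw device; and imaging the whole device is not necessarily identical to selecting a session in Disk Utility.

```python
import subprocess
from pathlib import Path
from typing import List


def image_command(device: str, output: Path) -> List[str]:
    """hdiutil invocation that copies the blocks of `device` into a compressed (UDZO) image."""
    return ["hdiutil", "create", "-srcdevice", device, "-format", "UDZO", str(output)]


def mount_command(image: Path) -> List[str]:
    """hdiutil invocation that mounts the image, like double-clicking it in the Finder."""
    return ["hdiutil", "attach", str(image)]


def run(cmd: List[str]) -> None:
    """Execute one of the commands above (Mac only); raises on failure."""
    subprocess.run(cmd, check=True)
```

For example, `run(image_command("/dev/disk2", Path("Game.dmg")))` followed later by `run(mount_command(Path("Game.dmg")))`, with the device path and file name adjusted for your system.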